(More new topics: newly added tools include GitHub Actions, Helm, GitHub Advanced Security, the Trivy scanner, and the Checkov IaC security scanning tool.)

About Coach Ananth (also known as Coach AK):

- 🎓 TOGAF-Certified Architect with 24 years of IT experience, including 10+ years in DevOps and Cloud Computing.

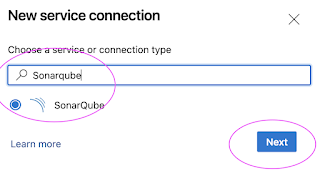

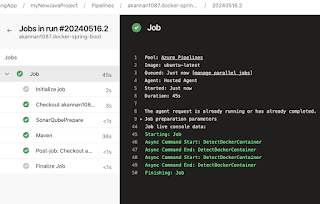

- 🛠 Expertise in DevOps Tools: Git, BitBucket, GitHub, Jenkins, Maven, SonarQube, Nexus, Artifactory, Slack, Terraform, Ansible, Docker, Helm, Prometheus, and Kubernetes on AWS and Azure platforms.

- 🚀 Proven Success: Coached over 3,000 professionals, with many placed in top-tier companies.

- 📚 Unique Learning Model: Minimal theory, maximum hands-on labs, focusing on job-relevant skills.

- 🌐 Comprehensive Cloud Training: Master AWS and Azure through a practical, immersive experience.

- ✍️ Personalized Support: Includes resume preparation and one-on-one interview coaching to help candidates stand out.

- 🎉 Milestone Achieved: Celebrating 8 years of successful coaching in August 2025.

- 🤝 Learn from the Best: Led by a Senior DevOps Coach/Architect currently working with a leading IT services company in the U.S.

Here is the coaching model:

- 🚀 12-Week Hands-On DevSecOps Coaching: Gain real-world experience with AWS and Azure cloud platforms.

- 🔧 80% Practical, 20% Theory: Over 50 lab exercises ensure you learn by doing.

- 💻 Comprehensive Tool Coverage: Master Git, GitHub, Jenkins, Maven, SonarQube, Nexus, Terraform, Ansible, Docker, Kubernetes, Helm, Prometheus, and more.

- 👨‍🏫 Expert-Led Training: Learn from Coach AK, a TOGAF-certified architect with 24+ years of IT experience.

- 🤝 Collaborative Learning: Get access to WhatsApp groups for troubleshooting, discussions, and peer support.

- 📄 Resume & Interview Support: Tailored guidance to make your job applications stand out.

- ✅ Proven Success: Join over 3,000 professionals who’ve advanced their careers with our program.

- 🌟 Flexible Learning Options: Designed to fit your schedule and career goals.

- 💡 Transform Your Career: Believe in yourself—anything is possible!